This is a retrieved form of the original article from the series, ‘A Spotlight on Undergraduate Research’.

Edited by Niharika Gunturu.

From being computed by biological neurons to being simulated by software to running on artificial neurons, Computational Fluid Dynamics has come full circle.

Aravinth C K, a 5th-year Dual Degree student from the Department of Aerospace Engineering, gives us a glimpse into his Dual Degree Project – in which he uses the confluence of ML tools and standard Computational Fluid Dynamics to predict and correct the simulations of turbulence and turbulent flows.

‘We actually study rocket science’ is a brag you keep hearing from countless Aerospace Engineering graduates, and a joke that never seems to get old. I would be lying if I said that I do not belong to this group of people trying to glorify their aerospace degree. But I must confess that the research I am currently pursuing as part of the curriculum-mandated dual-degree project does not necessarily fall under the classification of rocket science. Nonetheless, I find it unique and very exciting to work on.

My work revolves around a word that most of us who have travelled in aeroplanes will surely have come across at some point in our journeys. Have you ever experienced the occasional disturbance during a flight? It surely sends a chill down the spine to hear the pilot ask us to fasten our seat belts almost immediately in response to such a disturbance. More often than not, the pilot uses the term ‘turbulence’ to explain the discomfort. My work is concerned with this very term: turbulence. I deal with the modelling of turbulent flows with the help of simulations accelerated by data-driven techniques. I did not have a clue what the word turbulence meant before my formal engineering education, and I do not completely understand what it means now, even after spending several months working in this field. This sentiment is quite common among researchers in the area. Turbulence remains an unresolved problem in physics even after several decades of dedicated research.

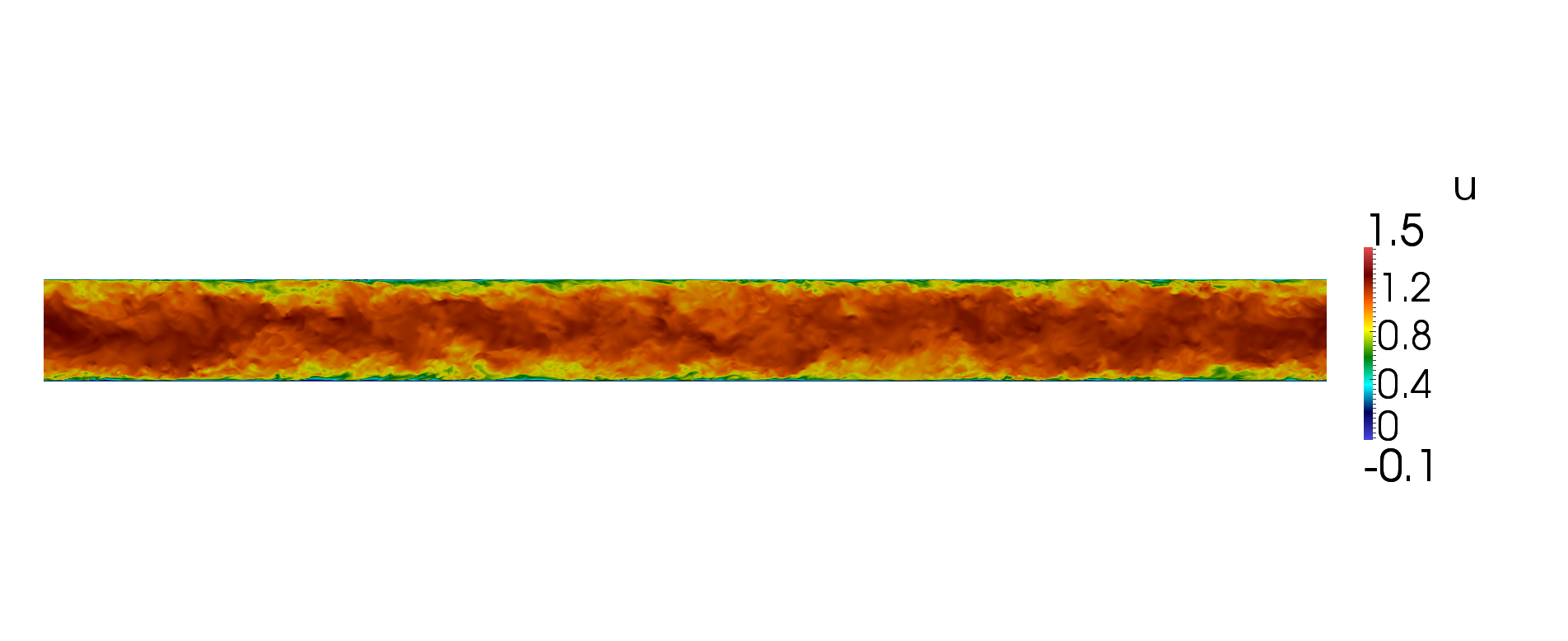

Laminar and turbulent flows are the two regimes of fluid flow: the first corresponds to streamlined motion and the second to chaotic motion. Just like anything else in science, these flows are governed by a set of equations. In fact, it is the very same conservation equations of fluid mechanics, and specifically the Navier-Stokes equations, that help us study such flows. My research entails the examination of such flows in very rudimentary geometries, such as inside a channel [Figure 1], a flow that happens to be one of the most extensively investigated in fluid mechanics. Conducting simulations for such geometries and collecting relevant data for later use forms the bulk of my research. In addition, I employ tools from Machine Learning to correct and revise these simulations, with the intention of bringing the solutions as close to the true flow as possible.

Figure 1: Mean velocity field variation inside a channel flow investigated using RANS simulations.

Machine learning (ML), which forms an integral part of my work, is the buzzword these days. The craze for ML stems from its application-oriented nature and the seemingly endless capabilities it offers its users. It has found its way into a multitude of applications and research areas, from text translation and face recognition on our hand-held mobile devices to proposing treatments for trauma patients based on past knowledge and experience (basically, past data). It is only natural that ML techniques have been incorporated into the field of Computational Fluid Dynamics (CFD) too.

It is quite intriguing to note that the first papers and articles relating to ML date back to as early as the 1950s and 60s, and yet it is only recently that it has become such an attractive field in science and engineering. It has been taking giant strides in enriching people’s lives over the last couple of decades. What took ML so long to attain the popularity it sees today? What changed that helped ML flourish the way it has? In my view, the reasons are two-fold: much-improved processing power, and the ability to generate large amounts of data and store it compactly.

It is estimated that there has been a trillion-fold increase in computational performance over the last five decades. The best computers of the Apollo era (the US space missions to land humans on the Moon) executed instructions hundreds of millions of times slower than modern smartphones, which gives us a feel for the scale of the increase. Moreover, the command module computer used by the astronauts to control the spacecraft during the lunar missions had processing power equivalent to a pair of Nintendo consoles. Such comparisons between two different generations may not always be appropriate or meaningful, but they do give us an idea of the technological advancement we have experienced.

Coming back to my project, my research is thoroughly inter-disciplinary in its approach, as it requires the integration of knowledge and ideas from both CFD and Machine Learning. You might be wondering how one could use ML in an engineering problem pertaining to turbulent flow simulations. A glimpse into my research work should give you a much better idea of how this was achieved. The basic idea is to consider two simulation techniques: one far superior but inordinately expensive, called Direct Numerical Simulation (DNS), and the other cheaper but less accurate, more generally known as Reynolds Averaged Navier-Stokes (RANS) models. We collect data for the quantity under investigation from both, and then predict that quantity starting from the inferior technique, after having learned from the superior one.

The two-equation k-ε model, a modelling paradigm that falls under the Reynolds Averaged Navier-Stokes (RANS) category, was one of the techniques considered for the simulations. As the name suggests, RANS involves time-averaging, and the solutions obtained describe the mean flow characteristics, such as the mean velocity field. RANS is the approach most commonly used for engineering design purposes. RANS models suffer from the closure problem: the averaged equations contain more unknowns than equations, so they cannot be solved as they stand and require modelling assumptions. As a result, they can give notoriously unreliable solutions.
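For readers who would like to see the closure problem written out, here is the standard textbook form for incompressible flow. It is not taken from the project itself, and the notation is an assumption made for illustration: an overbar denotes a time average, a prime denotes a fluctuation, $\nu$ is the kinematic viscosity and $\rho$ the density. Each velocity component is split into a mean and a fluctuating part, and the Navier-Stokes momentum equation is then averaged:

$$
u_i = \bar{u}_i + u_i', \qquad
\frac{\partial \bar{u}_i}{\partial t} + \bar{u}_j \frac{\partial \bar{u}_i}{\partial x_j}
= -\frac{1}{\rho}\frac{\partial \bar{p}}{\partial x_i}
+ \nu \frac{\partial^2 \bar{u}_i}{\partial x_j \partial x_j}
- \frac{\partial \overline{u_i' u_j'}}{\partial x_j}
$$

The last term, the Reynolds stress $\overline{u_i' u_j'}$, is a new unknown that the averaging introduces, and this is what has to be modelled. The standard k-ε model does so through the eddy-viscosity (Boussinesq) assumption, with $\bar{S}_{ij}$ the mean strain-rate tensor and $C_\mu \approx 0.09$ in the usual calibration:

$$
-\overline{u_i' u_j'} = 2\,\nu_t\,\bar{S}_{ij} - \frac{2}{3}\,k\,\delta_{ij},
\qquad \nu_t = C_\mu \frac{k^2}{\varepsilon}
$$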

On the other hand, Direct Numerical Simulation (DNS) can provide complete information about the flow, unaffected by any modelling assumptions, unlike the RANS models. It is an excellent research tool but comes at a very high computational price (several months on the fastest supercomputers available to us), which renders it infeasible as a general-purpose design tool. For this project, the RANS simulations were run in OpenFOAM, whereas the DNS solutions were taken from the available literature.
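A rough sense of why DNS is so expensive comes from the classical Kolmogorov estimate, sketched here as a textbook argument for homogeneous turbulence, not as a calculation from this project. DNS must resolve every scale from the largest eddies of size $L$ down to the smallest, dissipative ones of size $\eta$, and the ratio between the two grows with the Reynolds number $Re$:

$$
\frac{\eta}{L} \sim Re^{-3/4}
\quad\Longrightarrow\quad
N_{\text{grid}} \sim \left(\frac{L}{\eta}\right)^{3} \sim Re^{9/4}
$$

The number of grid points in three dimensions therefore rises steeply with $Re$, before even counting the many time steps needed, whereas RANS only has to resolve the mean flow.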

After the data from both the RANS and DNS simulations had been collected, the two were linked through a deep neural network architecture [Figure 2]. The neural network is used to estimate a parameter in the RANS model that suffers from the assumptions made. The network was trained with velocity gradients obtained from the RANS model as inputs, with the DNS solutions serving as the ground truth. By incorporating ML into the CFD problem, we were able to build a time-efficient alternative that potentially bridges RANS, a commercially used model, and DNS. This also means that we get a thorough understanding of the flow at a much lower computational expense. This project, inspired by previous work in this field[1][2], exploits the vast amount of data already available to us from experiments and simulations. However, the robustness of such ML-assisted flow modelling should not be overlooked, as there are still questions over its ability to predict equally good results for new flows unseen by the neural network model.

Figure 2: Depiction of a neural network architecture. Here, the hidden layers help build a highly non-linear relationship between inputs and outputs. [1]
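To make the training setup concrete, here is a minimal sketch in Python with NumPy. It is not the network used in the project: the single hidden layer, its size, the learning rate and all names (`x_rans`, `y_dns`, `train`, and so on) are assumptions made for illustration, and the data is synthetic. Random numbers stand in for the RANS velocity-gradient inputs, and an arbitrary smooth function stands in for the DNS ground truth.

```python
import numpy as np


def init_params(n_in, n_hidden, n_out, rng):
    """Small random weights for a network with one hidden layer."""
    return {
        "W1": rng.normal(0.0, 0.5, (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.5, (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }


def forward(params, x):
    """Return the prediction and the hidden activations."""
    hidden = np.tanh(x @ params["W1"] + params["b1"])  # non-linear hidden layer
    return hidden @ params["W2"] + params["b2"], hidden


def mse(params, x, y_true):
    """Mean squared error between prediction and target."""
    y_pred, _ = forward(params, x)
    return float(np.mean((y_pred - y_true) ** 2))


def train(params, x, y_true, lr=0.05, epochs=2000):
    """Full-batch gradient descent with hand-written backpropagation."""
    for _ in range(epochs):
        y_pred, hidden = forward(params, x)
        grad_y = 2.0 * (y_pred - y_true) / y_true.size  # d(MSE)/d(y_pred)
        grad_z = (grad_y @ params["W2"].T) * (1.0 - hidden ** 2)  # through tanh
        grads = {
            "W2": hidden.T @ grad_y,
            "b2": grad_y.sum(axis=0),
            "W1": x.T @ grad_z,
            "b1": grad_z.sum(axis=0),
        }
        for name in params:
            params[name] -= lr * grads[name]
    return params


rng = np.random.default_rng(0)

# Hypothetical stand-in for velocity-gradient features from a RANS run.
x_rans = rng.uniform(-1.0, 1.0, (256, 2))
# Hypothetical stand-in for the DNS value of the quantity being corrected.
y_dns = np.sin(2.0 * x_rans[:, :1]) + 0.5 * x_rans[:, 1:] ** 2

params = init_params(n_in=2, n_hidden=16, n_out=1, rng=rng)
loss_before = mse(params, x_rans, y_dns)
params = train(params, x_rans, y_dns)
loss_after = mse(params, x_rans, y_dns)
print(f"MSE before training: {loss_before:.4f}, after: {loss_after:.4f}")
```

In a real study, the inputs and targets would come from the RANS and DNS data sets, and the error would be checked on flows held out from training, since generalisation to unseen flows is the open question.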

Through this research, I was able to pursue my passion for working on a project involving multi-disciplinary learning. I believe that traditional boundaries between disciplines are obsolete in this day and age and may be counter-productive to achieving innovative solutions.

With just a little under a semester to go, I am very excited to continue my research, and in the process, I intend to add value to the research community.

Aravinth C K is a final-year Dual Degree student from the Department of Aerospace Engineering. The article talks about the project he is pursuing as part of his master’s thesis under the supervision of Dr. Balaji Srinivasan and Dr. A Sameen, with support from Gaurav Yadav, a PhD student from the Department of Mechanical Engineering at IIT Madras.

References:

[1] J. Ling, A. Kurzawski and J. Templeton, “Reynolds averaged turbulence modelling using deep neural networks with embedded invariance”, Journal of Fluid Mechanics 2016, 807, 155–166.

[2] K. Duraisamy, G. Iaccarino and H. Xiao, “Turbulence Modeling in the Age of Data”, Annual Review of Fluid Mechanics 2019, 51, 1–23.