Design by: Shreethigha G

The razor-toothed piranhas of the genera Serrasalmus and Pygocentrus are the most ferocious freshwater fish in the world. In reality, they seldom attack a human.

In August 2010, Franklin Page of Seattle took 35.54 seconds to type the above sentence, thereby setting a record for the fastest time to type a text message using a touch screen mobile phone.

Franklin’s record was broken by many people over the next four years. On 7 November 2014, Brazilian teenager Marcel Fernandes Filho typed the same sentence in 17.00 seconds, less than half of Franklin's time from only four years earlier. The one thing all the record-breakers had in common: they used a swipe keyboard.

Conventional keyboards require you to lift your finger after every character. Gesture typing, by contrast, lets the user input a word by drawing a single continuous trace over the keyboard, lifting the finger only once the word is complete. It takes advantage of muscle memory: users subconsciously memorise the shapes of common words, which improves both typing speed and accuracy.

The advantages of gesture typing over conventional typing are especially significant for Indic language keyboards, since Indic languages have much larger character sets than English.

For example, the Hindi script contains 52 letters compared to 26 in English, which makes the keyboard denser and increases the chance of typing the wrong character. Unfortunately, swipe keyboards are available for only a few Indic languages, and the accuracy of these Indic versions has been observed to be below par.

These issues prompted Anirudh Sriram (second year, EE) and Emil Biju (third year, EE) to work with Professor Mitesh M. Khapra and Professor Pratyush Kumar of the CSE department and, under their guidance, build an architecture that enables swipe typing in Indic languages.

The Project

Their work proposed a solution to three tasks:

(i) English-to-Indic Decoding: predicting an Indic word from a gesture traced on an English-character keyboard, where the swiped letters phonetically correspond to the intended Indic word. An examination of general trends in user preferences showed that even when users want their words displayed in an Indic language, they may prefer a QWERTY keyboard with English characters because it is familiar. For example, to display the word भारत, a user might simply swipe through B-H-A-R-A-T rather than spend time hunting for the letters on an Indic keyboard.

(ii) Indic-to-Indic Decoding: predicting an Indic word from a gesture traced on an Indic-character keyboard.

(iii) Spelling Correction: a module that refines the transliterated output by mapping it to the closest meaningful word in the vocabulary. When the user makes an inadvertent error while swiping, this module corrects the typed word to the closest vocabulary word, saving the time that would otherwise be spent retyping it.
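
The article does not specify how "closest" is measured. One classic yardstick, sketched here only as an illustrative baseline and not as the team's method (which relies on learned spelling-aware embeddings), is Levenshtein edit distance: the fewest single-character insertions, deletions and substitutions needed to turn the typed word into a vocabulary word. The romanised vocabulary below is a hypothetical example.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between a and b, computed row by row."""
    prev = list(range(len(b) + 1))  # distance from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the characters match)
            ))
        prev = curr
    return prev[-1]


def closest_word(typed: str, vocabulary: list[str]) -> str:
    """Return the vocabulary word nearest to `typed` (ties go to the earlier word)."""
    return min(vocabulary, key=lambda word: edit_distance(typed, word))


# Hypothetical vocabulary; "bhatat" is one substitution away from "bharat".
vocab = ["bharat", "bharati", "barat", "bharatiya"]
print(closest_word("bhatat", vocab))  # bharat
```

Edit distance treats every key as equally likely to be mistyped; a model tuned to swipe errors can do better by learning which confusions actually occur.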

The team took a modular approach to development, with a separate module for each task:

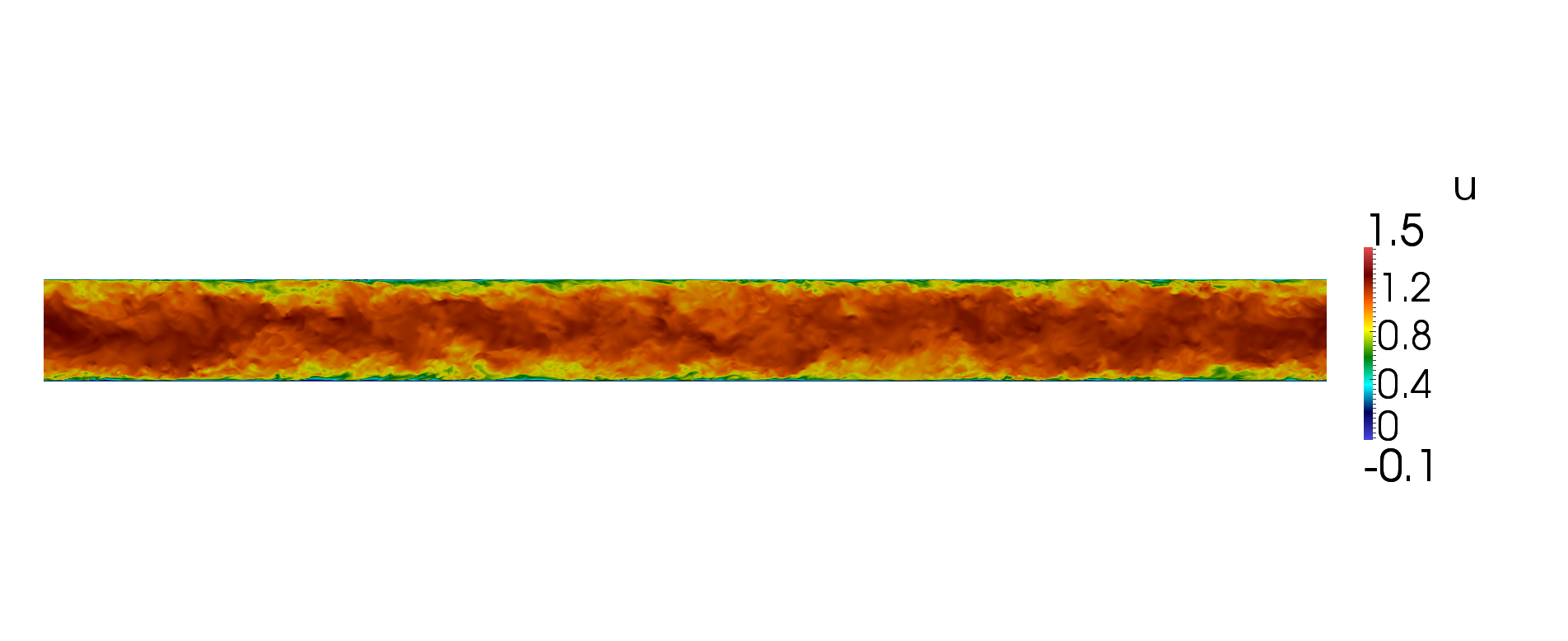

Swipe Path Decoding module:

Any swipe keyboard needs, at its core, a Swipe Path Decoding model: it takes as input the sequence of (x, y) coordinates describing the path the user traces on the keyboard and infers the intended character sequence.
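
To make this input concrete, here is a deliberately naive decoder, a hypothetical baseline rather than the team's model: it snaps each (x, y) point to the nearest key centre and collapses consecutive repeats. The key geometry (unit-width keys, rows offset by 0, 0.5 and 1.5 key widths) and the five-point path are assumptions for illustration.

```python
import math

# Assumed QWERTY geometry: one unit = one key width, rows staggered horizontally.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.5, 1.5]
KEY_CENTRES = {
    ch: (col + offset, float(row))
    for row, (keys, offset) in enumerate(zip(ROWS, ROW_OFFSETS))
    for col, ch in enumerate(keys)
}


def nearest_key(x: float, y: float) -> str:
    """Key whose centre is closest (Euclidean distance) to the point (x, y)."""
    return min(KEY_CENTRES, key=lambda k: math.dist((x, y), KEY_CENTRES[k]))


def naive_decode(path: list[tuple[float, float]]) -> str:
    """Map each path point to a key and drop consecutive duplicates."""
    keys: list[str] = []
    for x, y in path:
        k = nearest_key(x, y)
        if not keys or keys[-1] != k:
            keys.append(k)
    return "".join(keys)


# A coarse, hypothetical trace for "cat": c -> (passes near d) -> a -> (passes near e) -> t
path = [(3.5, 2.0), (2.1, 1.4), (0.5, 1.0), (2.2, 0.5), (4.0, 0.0)]
print(naive_decode(path))  # cdaet
```

The output contains every key the finger passes near, not just the intended c-a-t. Telling intended keys apart from incidental ones is exactly what a learned decoder has to do.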

While prior work on swipe keyboards has used LSTM and other RNN-based architectures to infer character sequences from (x, y) path coordinate sequences, this project introduced a joint LSTM-Transformer model that performs the task with higher accuracy. The Transformer is a powerful neural network architecture that has produced state-of-the-art results in several natural language processing and sequence learning tasks. Using the Transformer allowed the model to adapt to multiple languages with varying linguistic complexity and different dependencies between characters, yielding an architecture that generalises well across languages.
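
The article does not detail the joint LSTM-Transformer. The sketch below illustrates only the operation at the Transformer's core, scaled dot-product self-attention, softmax(Q·Kᵀ/√d)·V, which lets every point of a swipe path weigh every other point when building its representation. Learned projection matrices, multiple heads and the LSTM component are all omitted; using raw (x, y) features directly as queries, keys and values is an assumption for illustration.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q, k, v):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights): each output row is a weighted average of the
    rows of v, with weights softmax(q_i . k_j / sqrt(d)) over all j.
    """
    d = len(q[0])
    outputs, weights = [], []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return outputs, weights


# Three hypothetical path points, each described by (x, y).
path_features = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
outputs, weights = self_attention(path_features, path_features, path_features)
```

Unlike an RNN, which passes information step by step along the sequence, attention connects every pair of positions directly, one reason Transformers capture long-range dependencies well.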

Spelling Correction module:

To perform spelling correction on a mobile device with minimal latency and high accuracy, the team developed a model that adapts well to the kinds of errors most likely to occur when swipe typing.

Taking inspiration from how word embeddings such as Word2Vec and GloVe capture semantic similarity as geometric closeness between word vectors, the team developed spelling-aware word embeddings, in which words with similar spellings map to nearby vectors. To do this, they modified ELMo, a network for generating word embeddings, into a character-level network that treats each character as a single element of the input sequence and sums the per-character outputs to obtain a spelling-aware word embedding.

The advantage of ELMo is that the embeddings it generates depend on the relative position of each element in the sequence (so the same letters in a different order produce a different embedding), which yields better representations of spelling.
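
ELMo itself is far too large to sketch here. The toy below is a deliberately simple stand-in, not the team's network: it represents each word by counts of its adjacent character pairs (with ^ and $ marking the word boundaries). Words with similar spellings share many pairs and so score high in cosine similarity, and because each pair records which character follows which, the vector also reflects relative positions. The vocabulary is hypothetical.

```python
import math
from collections import Counter


def bigram_vector(word: str) -> Counter:
    """Sparse 'spelling vector': counts of adjacent character pairs.

    Pairs encode order, so anagrams such as 'listen' and 'silent'
    get different vectors even though they use the same letters.
    """
    padded = f"^{word}$"
    return Counter(padded[i:i + 2] for i in range(len(padded) - 1))


def cosine(u: Counter, v: Counter) -> float:
    dot = sum(count * v[gram] for gram, count in u.items())  # missing grams count as 0
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def correct(typed: str, vocabulary: list[str]) -> str:
    """Return the vocabulary word whose spelling vector is nearest to the typed word's."""
    typed_vec = bigram_vector(typed)
    return max(vocabulary, key=lambda w: cosine(typed_vec, bigram_vector(w)))


print(correct("helo", ["hello", "help", "world"]))  # hello
```

In a learned model, the vectors come from a trained network rather than fixed counts, so the notion of "similar spelling" can be shaped by the errors actually seen in swipe data.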

Creating an Indic language swipe dataset:

When starting the project, the team found that large, well-curated datasets for training swipe-typing models in Indic languages were scarce. They therefore devised a novel method of simulating a human's swipe input for a given word and keyboard layout.

The method draws on a principle of human motor control: body movements tend to follow the path of minimum jerk, where jerk is the rate of change of acceleration.

To simulate the swipe for a word, the team therefore generated a minimum-jerk path connecting the keys of the word's characters on the keyboard layout, and added small perturbations to model real-world noise.
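
For a single movement from point p0 to point p1 that starts and ends at rest, the minimum-jerk trajectory has a well-known closed form: p(τ) = p0 + (p1 − p0)(10τ³ − 15τ⁴ + 6τ⁵), where τ = t/T runs from 0 to 1 over the movement duration T. The sketch below chains one such segment per pair of consecutive keys and adds Gaussian jitter. It is a simplification under stated assumptions: the layout geometry, points per segment and noise model are hypothetical, and chaining rest-to-rest segments makes the virtual finger pause at every key, whereas a true minimum-jerk path through via-points would not.

```python
import random

# Assumed QWERTY geometry: one unit = one key width, rows staggered horizontally.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.5, 1.5]
KEY_CENTRES = {
    ch: (col + offset, float(row))
    for row, (keys, offset) in enumerate(zip(ROWS, ROW_OFFSETS))
    for col, ch in enumerate(keys)
}


def min_jerk_segment(p0, p1, n_points):
    """Sample n_points (>= 2) of the rest-to-rest minimum-jerk path from p0 to p1."""
    points = []
    for i in range(n_points):
        tau = i / (n_points - 1)  # normalised time in [0, 1]
        s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5  # fraction of the distance covered
        points.append((p0[0] + (p1[0] - p0[0]) * s, p0[1] + (p1[1] - p0[1]) * s))
    return points


def simulate_swipe(word, points_per_segment=20, noise=0.05, seed=None):
    """Chain minimum-jerk segments through the word's keys, then add Gaussian jitter."""
    rng = random.Random(seed)
    keys = [KEY_CENTRES[ch] for ch in word]
    path = [keys[0]]
    for p0, p1 in zip(keys, keys[1:]):
        # Skip each segment's first point: it duplicates the previous segment's last point.
        path.extend(min_jerk_segment(p0, p1, points_per_segment)[1:])
    return [(x + rng.gauss(0, noise), y + rng.gauss(0, noise)) for x, y in path]


trace = simulate_swipe("bharat", seed=0)
print(len(trace))  # 96 points: 1 start point + 5 segments x 19 new points
```

Because the simulator only needs a word list and a layout, the same code can in principle generate training paths for any script once its keyboard's key positions are known.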

This dataset, spanning 7 Indic languages (Hindi, Bengali, Gujarati, Tamil, Telugu, Kannada and Malayalam), has been open-sourced to support further research in this area.

Results

The model was evaluated on all 7 Indic languages and performed competitively on each of them, with word-level accuracy ranging from 70% to 89%.

It also achieved higher accuracy on English swipe typing than other well-known models, which can be attributed in large part to the use of the Transformer and the novel spelling correction approach.

The project began in February 2020 and was completed by July 2020, and a research paper on the work has been published at COLING (the International Conference on Computational Linguistics). You can view their publication at this link.

Going forward, Emil and Anirudh aim to extend their work to support visually impaired users and to expand their dataset to more languages.

Edited by: Amrita Mahesh

This series, Spotlight for Undergraduate Research, features interesting and exciting research by undergraduates in insti. We hope it raises awareness of UG research and motivates UG students to take up enriching research at some point in their insti lives.